Exploring Live Streaming on the Browser: Protocols and Latency Considerations

Introduction:

Throughout my journey, I've encountered different streaming protocols like HTTP Live Streaming (HLS), Dynamic Adaptive Streaming over HTTP (DASH) and Real-Time Messaging Protocol (RTMP). Each has its quirks, strengths, and challenges. I'll break it all down for you in a way that's easy to understand.

We will also talk about Jsmpeg, a JavaScript-based solution that caught my attention. It uses JavaScript, WebGL, and the HTML5 canvas element to decode and render video directly in your browser with low latency and no plugins. It's like magic! I'll be sharing my thoughts and experiences on this technology and exploring its potential applications.

Table of Contents:

Understanding Live Streaming

Streaming Protocols: A Deep Dive

HTTP Live Streaming (HLS)

Dynamic Adaptive Streaming over HTTP (DASH)

Real-Time Messaging Protocol (RTMP)

Jsmpeg: Exploring an Innovative JavaScript-based Solution

Latency in live-streaming

What is Latency?

Factors Affecting Latency

Comparing Latency Across Streaming Protocols

Benchmarking and Performance

Measuring Latency: Tools and Techniques

Performance Considerations and Optimization Strategies

Conclusion

Understanding Live Streaming

Live streaming has revolutionized the way we consume video content, enabling real-time delivery of audio and video over the internet. It allows viewers to experience events, performances, presentations, and more, as they happen, regardless of their physical location. Understanding the fundamentals of live streaming is crucial for building successful streaming applications and delivering seamless experiences to your audience.

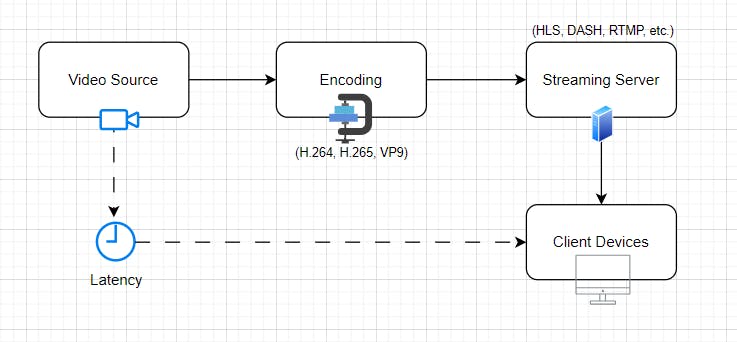

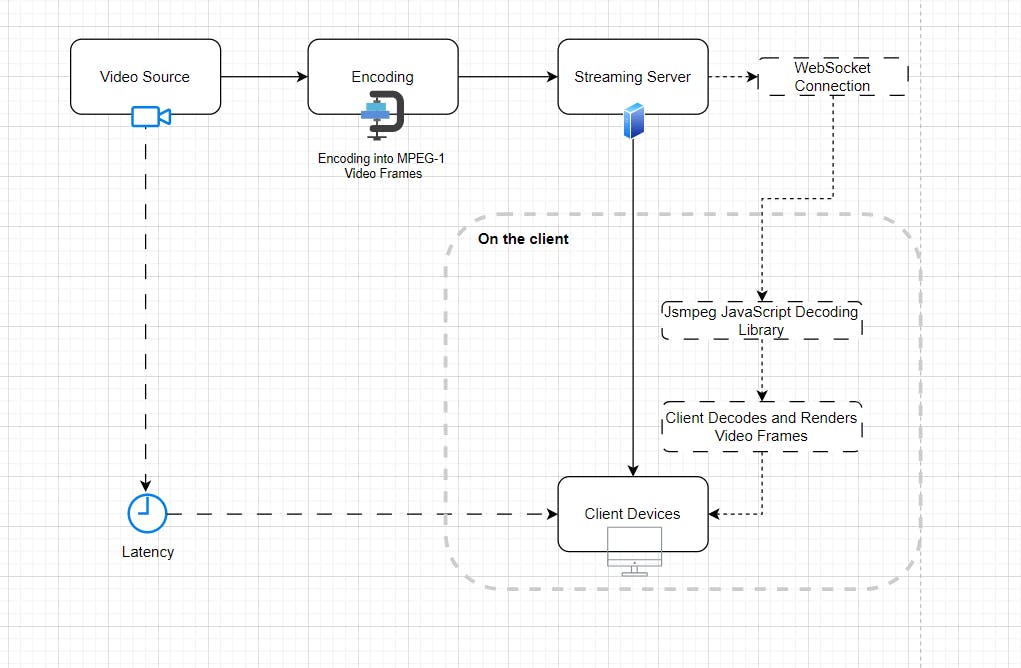

At its core, live streaming involves transmitting audio and video data from a source to many viewers in real time. This process requires specialized protocols, encoding techniques, and infrastructure to ensure smooth, uninterrupted playback. Let's explore the key components of a live-streaming pipeline:

Video Source: The video source is the origin of the live content. It can be a camera capturing a live event, a computer screen displaying a presentation, or any other device or application generating the video feed.

Encoding: Before the video can be streamed, it needs to be encoded. Encoding involves compressing the video data into a format that can be efficiently transmitted over the internet. Common video codecs used for live streaming include H.264, H.265, and VP9.

Streaming Server: A streaming server acts as an intermediary between the video source and the viewers. It receives the encoded video stream from the source and distributes it to multiple viewers over the internet. The server manages the streaming protocols, handles client connections, and ensures the efficient delivery of the video data.

Streaming Protocols: Streaming protocols define the rules and methods for transmitting video data over the internet. Different protocols have different features, compatibility, and support for various devices and platforms. Some popular streaming protocols include HTTP Live Streaming (HLS), Dynamic Adaptive Streaming over HTTP (DASH) and Real-Time Messaging Protocol (RTMP).

Client Devices: Client devices are the end-user devices that receive and play the live stream. These devices can include web browsers, mobile phones, tablets, smart TVs, or dedicated streaming devices. The client devices need to support the streaming protocol used by the server and have the necessary media players or applications to decode and display the video stream.

Latency: Latency is the delay between the moment the video is captured and the moment it is displayed on the viewer's screen. Low latency is crucial for real-time applications such as live sports, gaming, or interactive events, where viewers need to see (and react to) the action as close as possible to when it happens.
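To see why the encoding step is unavoidable, it helps to compare raw and compressed bitrates. The sketch below is a back-of-the-envelope calculation that assumes 8-bit video with 4:2:0 chroma subsampling (12 bits per pixel on average) and a hypothetical 6 Mbit/s encoder target; the function names are illustrative only.

```typescript
// Uncompressed bitrate of 8-bit 4:2:0 video: each pixel carries 8 bits of luma
// plus, on average, 4 bits of chroma, i.e. 12 bits per pixel.
function rawBitrateBps(width: number, height: number, fps: number): number {
  const bitsPerPixel = 12; // assumption: 8-bit samples, 4:2:0 subsampling
  return width * height * bitsPerPixel * fps;
}

// How many times smaller the encoded stream is than the raw one.
function compressionRatio(rawBps: number, encodedBps: number): number {
  return rawBps / encodedBps;
}

const raw = rawBitrateBps(1920, 1080, 30);   // 746,496,000 bit/s (~746 Mbit/s)
const encoded = 6_000_000;                    // hypothetical 6 Mbit/s H.264 target
const ratio = compressionRatio(raw, encoded); // ~124:1
```

At roughly 746 Mbit/s uncompressed, a single 1080p30 feed would overwhelm most viewers' connections, so the encoder has to shrink it by about two orders of magnitude before it reaches the streaming server.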

By understanding these fundamental components, you can navigate the complexities of live streaming and make informed decisions when building streaming applications. Each component plays a vital role in delivering high-quality and real-time video experiences to your audience. In the following sections, we'll explore different streaming protocols and dive deeper into the concept of latency, equipping you with the knowledge to create exceptional live-streaming applications.

Streaming Protocols: A Deep Dive

In the realm of live streaming on the browser, various protocols play a crucial role in delivering real-time video content. Understanding these protocols is essential for selecting the right approach for your streaming application. In this section, we'll take a deep dive into some of the prominent streaming protocols:

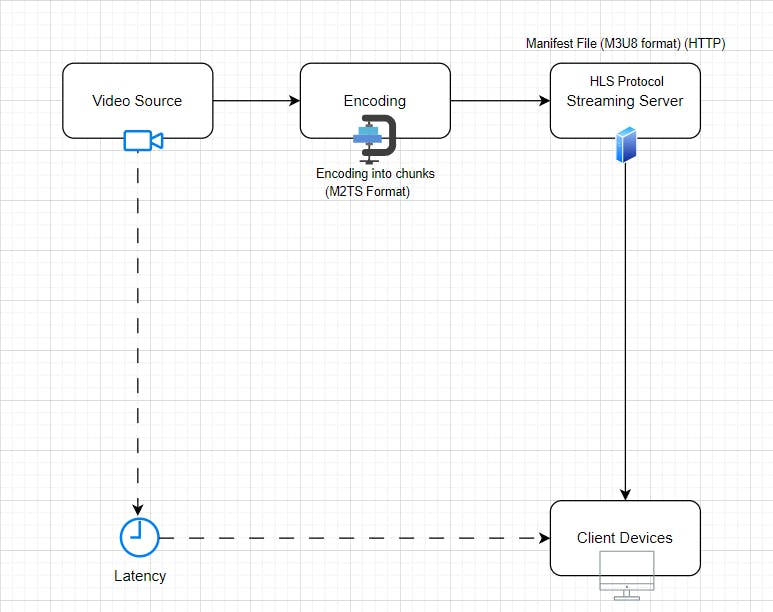

HTTP Live Streaming (HLS): HLS, developed by Apple, is an adaptive streaming protocol that breaks the video stream into small segments, lists them in a playlist file (.m3u8), and delivers both over plain HTTP. Because it runs over ordinary HTTP, it works with standard web servers and CDNs, which has made it widely adopted: Safari has long played HLS natively, while many other browsers play it through Media Source Extensions with a JavaScript library such as hls.js. HLS adjusts the video quality dynamically based on the viewer's network conditions, ensuring a smooth streaming experience. It supports encryption for content protection and enables DVR-like functionality for live streams. Flowchart:

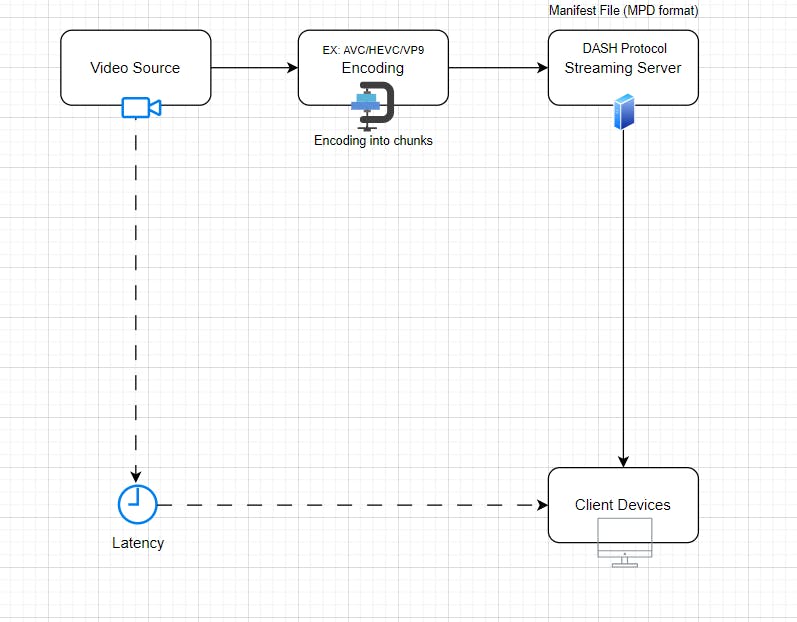
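To make the segment-and-playlist idea concrete, here is a hypothetical live media playlist and a minimal sketch that parses it and picks a starting segment. It only understands the handful of tags used in the example (a real player such as hls.js or Safari's built-in support handles far more), and the starting rule follows RFC 8216, which says a client SHOULD NOT start at a segment that begins less than three target durations from the end of the playlist.

```typescript
// Hypothetical live playlist: no #EXT-X-ENDLIST tag, so the server keeps appending.
const playlist = `#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:6.0,
segment120.ts
#EXTINF:6.0,
segment121.ts
#EXTINF:6.0,
segment122.ts
#EXTINF:6.0,
segment123.ts
#EXTINF:6.0,
segment124.ts
`;

interface Segment {
  sequence: number; // media sequence number
  duration: number; // seconds, from #EXTINF
  uri: string;
}

interface MediaPlaylist {
  targetDuration: number; // seconds, from #EXT-X-TARGETDURATION
  segments: Segment[];
}

// Minimal parser: handles only the tags used above and ignores everything else.
function parseMediaPlaylist(text: string): MediaPlaylist {
  let targetDuration = 0;
  let sequence = 0;
  let pendingDuration: number | null = null;
  const segments: Segment[] = [];
  for (const rawLine of text.split("\n")) {
    const line = rawLine.trim();
    if (line.startsWith("#EXT-X-TARGETDURATION:")) {
      targetDuration = Number(line.slice("#EXT-X-TARGETDURATION:".length));
    } else if (line.startsWith("#EXT-X-MEDIA-SEQUENCE:")) {
      sequence = Number(line.slice("#EXT-X-MEDIA-SEQUENCE:".length));
    } else if (line.startsWith("#EXTINF:")) {
      // "#EXTINF:<duration>,<title>": parseFloat stops at the comma
      pendingDuration = parseFloat(line.slice("#EXTINF:".length));
    } else if (line !== "" && !line.startsWith("#") && pendingDuration !== null) {
      segments.push({ sequence: sequence++, duration: pendingDuration, uri: line });
      pendingDuration = null;
    }
  }
  return { targetDuration, segments };
}

// Walk back from the end until we are at least three target durations from the live edge.
function pickStartSegment(pl: MediaPlaylist): Segment {
  if (pl.segments.length === 0) throw new Error("empty playlist");
  const minDistance = 3 * pl.targetDuration;
  let distanceFromEnd = 0;
  for (let i = pl.segments.length - 1; i >= 0; i--) {
    distanceFromEnd += pl.segments[i].duration;
    if (distanceFromEnd >= minDistance) return pl.segments[i];
  }
  return pl.segments[0]; // playlist shorter than the minimum: start at the oldest segment
}
```

Here the player would start at `segment122.ts`. With 6-second segments that puts it at least 18 seconds behind the newest segment, which is a large part of why standard HLS latency is measured in tens of seconds.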

Dynamic Adaptive Streaming over HTTP (DASH): DASH (often called MPEG-DASH) is an adaptive streaming standard developed by MPEG and published as ISO/IEC 23009-1. Like HLS, it divides the video into small segments, described by a manifest called the Media Presentation Description (MPD), and dynamically adjusts the quality based on the viewer's network conditions. DASH is codec-agnostic, so it can carry various codecs across different devices and platforms; in browsers it is usually played through Media Source Extensions with a library such as dash.js. It offers features like multi-language audio tracks and subtitles while allowing content providers to implement their preferred content protection mechanisms. Flowchart:

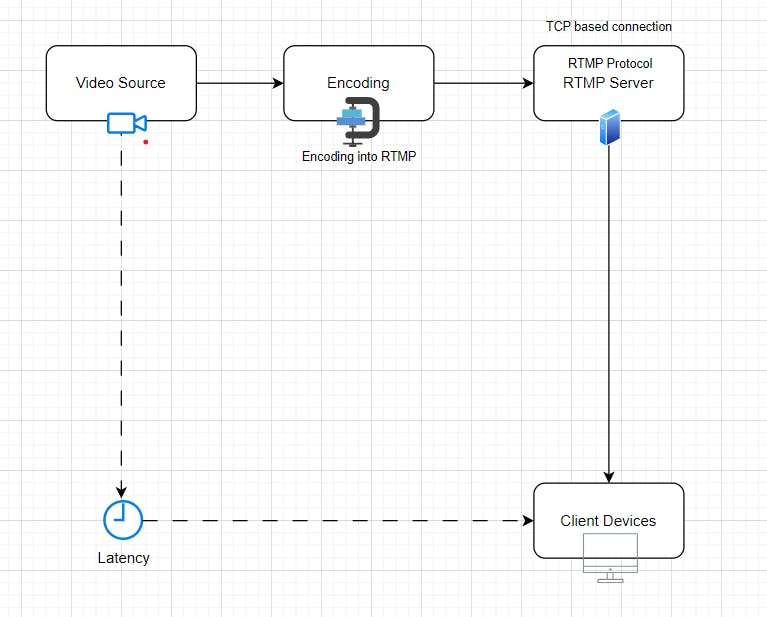
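In a live DASH stream, the MPD often describes segments with a `SegmentTemplate` instead of listing every file. The sketch below shows how a client could work out the newest segment number for number-based (`$Number$`) addressing. It is a simplification that assumes a fixed segment duration, a presentation time offset of zero, no `availabilityTimeOffset`, and a plain `$Number$` placeholder without format tags; the interface and function names are hypothetical.

```typescript
// Simplified view of an MPD element such as:
// <SegmentTemplate media="video_$Number$.m4s" startNumber="1" duration="4000" timescale="1000"/>
interface SegmentTemplate {
  media: string;       // URL template containing $Number$
  startNumber: number;
  duration: number;    // segment duration in timescale units
  timescale: number;   // units per second
}

// Newest segment that is fully produced, assuming a segment becomes available
// once its end time has passed. Returns null if no segment is complete yet.
function latestAvailableNumber(
  tpl: SegmentTemplate,
  availabilityStartTimeMs: number, // MPD@availabilityStartTime as epoch ms
  periodStartSec: number,          // Period@start in seconds
  nowMs: number,
): number | null {
  const segDurSec = tpl.duration / tpl.timescale;
  const elapsedSec = (nowMs - availabilityStartTimeMs) / 1000 - periodStartSec;
  const completed = Math.floor(elapsedSec / segDurSec);
  return completed >= 1 ? tpl.startNumber + completed - 1 : null;
}

// Substitutes the segment number into the URL template.
function segmentUrl(tpl: SegmentTemplate, n: number): string {
  return tpl.media.replace("$Number$", String(n));
}

const liveTemplate: SegmentTemplate = {
  media: "video_$Number$.m4s",
  startNumber: 1,
  duration: 4000,
  timescale: 1000,
};
```

Ten seconds after the availability start, two 4-second segments are complete, so the newest is `video_2.m4s`. A player would then start a few segments behind that number to keep a safety buffer, and that margin is where much of DASH's latency comes from.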

Real-Time Messaging Protocol (RTMP): RTMP, originally developed by Macromedia (later acquired by Adobe), is a TCP-based protocol designed for real-time streaming and interactive applications. It enables the transmission of audio, video, and data between a streaming server and a client. RTMP is known for its low latency and interactive capabilities, which made it popular for live gaming and interactive streaming platforms. However, browsers have no native RTMP support: playback relied on the Flash Player plugin, which reached end of life in 2020. Today RTMP is mostly used for ingest, that is, sending the stream from the encoder to the server, which then repackages it for browser-friendly delivery such as HLS or DASH.
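To give a feel for how low-level RTMP is, here is a sketch of the first bytes a client sends when it opens a connection: the C0 and C1 handshake chunks as described in Adobe's published RTMP specification (a 1-byte version number of 3, then 1536 bytes made of a 4-byte timestamp, 4 zero bytes, and 1528 random bytes). This would run in Node.js or on a server rather than in a browser page, since browsers cannot open raw TCP sockets. `buildC0C1` and `pseudoRandomBytes` are hypothetical helper names, and the random source shown is not cryptographically strong.

```typescript
const RTMP_VERSION = 3;
const HANDSHAKE_SIZE = 1536; // size of C1 (and S1, C2, S2)

// Non-cryptographic filler for the C1 random field (illustration only).
function pseudoRandomBytes(n: number): Uint8Array {
  const out = new Uint8Array(n);
  for (let i = 0; i < n; i++) out[i] = Math.floor(Math.random() * 256);
  return out;
}

// Builds C0 (1 byte) followed by C1 (1536 bytes), as the client sends them.
// After writing these to the TCP socket, the client reads S0, S1 and S2 from the
// server and replies with C2, which echoes S1 (not shown here).
function buildC0C1(
  timestampMs: number,
  random: (n: number) => Uint8Array = pseudoRandomBytes,
): Uint8Array {
  const buf = new Uint8Array(1 + HANDSHAKE_SIZE);
  buf[0] = RTMP_VERSION;                       // C0: protocol version
  const view = new DataView(buf.buffer);
  view.setUint32(1, timestampMs >>> 0, false); // C1 time field, big-endian, wraps at 2^32
  view.setUint32(5, 0, false);                 // C1 zero field
  buf.set(random(HANDSHAKE_SIZE - 8), 9);      // C1 random data: 1528 bytes
  return buf;
}
```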

These protocols represent a range of options for streaming video content on the browser, each with its advantages and considerations. The choice of protocol depends on factors such as target platforms, required features, latency requirements, and browser compatibility.

In addition to these established protocols, there are also innovative solutions like Jsmpeg, a JavaScript library for playing MPEG-1 video in the browser. Jsmpeg is not a protocol itself; for live streaming it typically receives an MPEG transport stream (MPEG-TS) carrying MPEG-1 video and MP2 audio over a WebSocket connection. It decodes the stream in JavaScript and renders the frames to an HTML5 canvas element (using WebGL where available), providing a lightweight, browser-native approach that needs no additional plugins or media players. The trade-off is that MPEG-1 is an old codec that needs a higher bitrate than H.264 for comparable quality.
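Jsmpeg's own documentation describes it as containing an MPEG-TS demuxer, so the binary WebSocket messages it consumes are MPEG transport stream packets: fixed 188-byte packets that each start with the sync byte 0x47 and carry a 13-bit packet identifier (PID) telling the demuxer which stream (video, audio, or tables) the payload belongs to. The sketch below decodes just those fixed 4-byte headers; it is not Jsmpeg's code, and `parseTsHeaders` is a hypothetical name.

```typescript
const TS_PACKET_SIZE = 188;
const SYNC_BYTE = 0x47;

interface TsPacketHeader {
  pid: number;               // 13-bit packet identifier (which elementary stream)
  payloadUnitStart: boolean; // true when a new PES packet or table section begins here
  continuityCounter: number; // 4-bit counter used to detect lost packets
}

// Splits a binary chunk (e.g. one WebSocket message) into 188-byte TS packets
// and decodes the fixed 4-byte header of each one. Trailing partial packets are ignored.
function parseTsHeaders(chunk: Uint8Array): TsPacketHeader[] {
  const headers: TsPacketHeader[] = [];
  for (let off = 0; off + TS_PACKET_SIZE <= chunk.length; off += TS_PACKET_SIZE) {
    if (chunk[off] !== SYNC_BYTE) {
      throw new Error(`lost sync at byte ${off}`);
    }
    const b1 = chunk[off + 1]; // TEI | PUSI | priority | PID bits 12..8
    const b2 = chunk[off + 2]; // PID bits 7..0
    const b3 = chunk[off + 3]; // scrambling (2) | adaptation field control (2) | continuity (4)
    headers.push({
      pid: ((b1 & 0x1f) << 8) | b2,
      payloadUnitStart: (b1 & 0x40) !== 0,
      continuityCounter: b3 & 0x0f,
    });
  }
  return headers;
}
```

In a browser you could feed it from a WebSocket whose `binaryType` is set to `"arraybuffer"` by wrapping each `event.data` in a `Uint8Array`. A real demuxer also has to handle packets split across messages and reassemble payloads into PES packets, which this sketch skips.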

Understanding these streaming protocols empowers you to make informed decisions when building your live streaming applications, ensuring optimal compatibility, performance, and user experience. In the next section, we will delve into the concept of latency in live streaming and its significance in delivering real-time experiences.

Latency in live-streaming

What is Latency?

Latency is the time delay between an event happening in real time and its display or playback on the client device during live streaming. It is an important aspect of live streaming because it determines how interactive and responsive the streaming experience feels.

Factors Affecting Latency

Several factors can contribute to latency in live streaming:

Encoding and Processing: The time it takes to encode and process the video stream before it is ready for transmission can introduce latency. The complexity of the encoding algorithm and the computational resources available can affect the encoding time.

Transmission and Network: The transmission of the video stream over the network introduces additional latency. Factors such as network congestion, bandwidth limitations, and packet loss can impact the delivery speed of the video stream to the client devices.

Protocol and Technology: Different streaming protocols and technologies have varying levels of inherent latency. Some protocols prioritize low latency, while others prioritize reliability or quality. The choice of protocol and technology used for streaming can influence the overall latency experienced by viewers.

Buffering and Playback: Client devices typically buffer a certain amount of video data before playback begins. Buffering helps to mitigate interruptions caused by network fluctuations but can introduce additional latency. The size of the buffer and how it is managed can affect the overall latency in live streaming.
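One way to reason about these factors is as a latency budget: the delay a viewer sees is roughly the sum of the delays added at each stage. The sketch below uses made-up stage timings purely for illustration, and converts the player buffer from bytes to time by dividing by the stream's bitrate; the names are hypothetical.

```typescript
interface LatencyStage {
  name: string;
  ms: number;
}

// Time represented by a player buffer: buffered bits divided by the media bitrate.
function bufferDelayMs(bufferedBytes: number, bitrateBps: number): number {
  return (bufferedBytes * 8 * 1000) / bitrateBps;
}

// End-to-end latency as the sum of per-stage delays.
function totalLatencyMs(stages: LatencyStage[]): number {
  return stages.reduce((sum, s) => sum + s.ms, 0);
}

// Hypothetical numbers, for illustration only.
const stages: LatencyStage[] = [
  { name: "capture + encode", ms: 200 },
  { name: "network transit", ms: 80 },
  { name: "player buffer", ms: bufferDelayMs(1_500_000, 4_000_000) }, // 3000 ms
  { name: "decode + render", ms: 50 },
];
// totalLatencyMs(stages) → 3330
```

Even in this toy budget the player buffer contributes far more delay than encoding or the network, which is why buffer size is often the biggest lever for reducing latency.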

Comparing Latency Across Streaming Protocols

When comparing latency across different streaming protocols, it's important to consider their design and underlying technology. Here is a general comparison of latency levels for common streaming protocols:

HTTP Live Streaming (HLS): HLS is a widely adopted streaming protocol for delivering video content over HTTP. Because a segment can only be published once it is complete, and players typically stay several segments behind the live edge, standard HLS latency is often in the range of tens of seconds, making it more suitable for non-interactive streaming scenarios. Low-Latency HLS (LL-HLS), a later addition to the protocol, uses smaller partial segments to bring this down to a few seconds.

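Dynamic Adaptive Streaming over HTTP (DASH): DASH is another HTTP-based streaming protocol that supports adaptive bitrate streaming. Because it is also segment-based, its latency is similar to HLS, typically tens of seconds with conventional settings. DASH focuses on high-quality streaming with adaptive bitrate switching rather than low latency, although low-latency DASH configurations using chunked CMAF segments can reduce the delay to a few seconds.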

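Real-Time Messaging Protocol (RTMP): RTMP is known for its low latency and is commonly used in interactive live streaming workflows. It typically offers latency in the range of a few seconds, which is why it remains a popular choice for ingest. Since browsers cannot play RTMP directly, though, the latency viewers actually see also depends on the protocol used for delivery.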

Jsmpeg: Jsmpeg leverages WebSockets for low-latency video streaming. Its latency can range from a few hundred milliseconds to a few seconds, depending on factors such as network conditions and server configuration.
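The gap between segment-based protocols and Jsmpeg follows largely from simple arithmetic. RFC 8216 advises HLS clients not to start playback less than three target durations from the end of the playlist, so the player begins at least three segments behind the live edge, and DASH players keep a similar margin. The sketch below adds that distance to a pipeline delay for encoding, packaging, and delivery; the 2-second pipeline figure is a hypothetical placeholder, not a measurement.

```typescript
// Rough estimate of live latency for segment-based delivery (HLS, DASH).
// segmentsBehindLive: how many whole segments the player stays behind the newest
//   published segment (about 3 for HLS, per RFC 8216's start-position rule).
// pipelineDelaySec: encode + package + upload + CDN + download time (assumed).
function segmentedLatencySec(
  segmentDurationSec: number,
  segmentsBehindLive: number,
  pipelineDelaySec: number,
): number {
  return segmentDurationSec * segmentsBehindLive + pipelineDelaySec;
}

// Hypothetical 2-second pipeline delay, compared across segment durations.
const pipelineDelaySec = 2;
const estimates = [6, 4, 2].map((duration) => ({
  segmentDurationSec: duration,
  latencySec: segmentedLatencySec(duration, 3, pipelineDelaySec),
}));
// estimates → 20 s, 14 s and 8 s respectively
```

Shortening segments helps, but the latency stays a multiple of the segment duration. A WebSocket-based approach like Jsmpeg has no segment term at all, which is why its latency can drop below a second.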

It's important to note that these latency levels are general estimates and can vary depending on various factors, including the specific implementation, network conditions, and the configuration of the streaming system.

When choosing a streaming protocol, it is essential to consider the latency requirements of your specific use case. Low-latency options like RTMP and Jsmpeg are suitable for applications requiring real-time interaction, while HLS and DASH are better suited for non-interactive streaming scenarios where quality and scalability are prioritized.

By understanding the factors that contribute to latency and comparing latency levels across different protocols, you can make informed decisions when implementing live streaming solutions.

Benchmarking and Performance

When it comes to live streaming, benchmarking and performance optimization are crucial for ensuring a smooth and reliable streaming experience. Let's look at tools and techniques for measuring latency, followed by performance considerations and optimization strategies.

Measuring Latency: Tools and Techniques

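Network Monitoring Tools: Use tools like ping, traceroute, or dedicated network monitoring software to gauge the round-trip time (RTT) between server and client. These tools reveal network latency and help pinpoint bottlenecks, but they measure only the network path, not the end-to-end delay added by encoding, buffering, and decoding.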

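Client-Side Timing: Attach a timestamp to each frame at capture time and compare it with the time the frame is rendered on the viewer's device. Recording timestamps at intermediate stages as well lets you break the total down into encoding, transmission, and decoding latency. Because capture and playback happen on different machines, their clocks must be synchronized, or their offset estimated, before these differences are meaningful.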
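The clock-synchronization step can be sketched with the same four-timestamp exchange that NTP uses: the client records when it sends a request (t0) and receives the reply (t3), and the server records when it receives the request (t1) and sends the reply (t2). The code assumes symmetric network paths and that each frame carries its capture time on the server's clock (for example as embedded metadata); how that timestamp is transported is outside this sketch, and all names are hypothetical.

```typescript
// Four timestamps from one request/response exchange, in milliseconds.
// t0, t3 are read from the client clock; t1, t2 from the server clock.
interface ClockSample {
  t0: number; // client send
  t1: number; // server receive
  t2: number; // server send
  t3: number; // client receive
}

// Round-trip network time, excluding the server's processing time.
function roundTripMs(s: ClockSample): number {
  return (s.t3 - s.t0) - (s.t2 - s.t1);
}

// Estimated offset of the server clock relative to the client clock
// (positive means the server clock is ahead). Assumes symmetric paths.
function clockOffsetMs(s: ClockSample): number {
  return ((s.t1 - s.t0) + (s.t2 - s.t3)) / 2;
}

// Capture-to-display latency for a frame stamped with its capture time on the
// server clock and rendered at `displayedAtClientMs` on the client clock.
function frameLatencyMs(captureServerMs: number, displayedAtClientMs: number, offsetMs: number): number {
  return displayedAtClientMs + offsetMs - captureServerMs;
}
```

Taking several samples and keeping the one with the smallest round-trip time usually gives a more reliable offset, because that sample suffered the least queueing delay.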

Performance Considerations and Optimization Strategies

Video Encoding: Optimize the encoding settings to strike a balance between video quality and latency. Adjust parameters such as bitrate, frame rate, keyframe interval, and encoding complexity (for example, a faster encoder preset, or disabling B-frames, which add reordering delay) to achieve the desired streaming performance. Use hardware acceleration or specialized encoding libraries to speed up the encoding process.

Adaptive Bitrate Streaming: Implement adaptive bitrate streaming techniques to dynamically adjust the video quality based on the network conditions. This helps mitigate buffering and latency issues by providing the optimal video bitrate for each client device and network connection.

Caching and Prefetching: Implement caching mechanisms, such as CDN edge servers, to store frequently accessed video segments closer to the clients. This reduces the need for frequent retrieval from the origin server, improving response time and reducing latency. Prefetching techniques can also be employed to anticipate and load future segments in advance.

Server-Side Optimization: Optimize the server infrastructure and software stack for efficient handling of streaming requests. Utilize load balancing, horizontal scaling, and caching mechanisms to distribute the streaming workload and improve response times.
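A common way to implement the adaptive bitrate idea above is a throughput-based rule: estimate the available bandwidth from recent segment downloads and pick the highest rendition that fits under it with some safety margin. The sketch below uses an exponentially weighted moving average; the smoothing factor of 0.3, the 0.8 safety margin, and the class and function names are assumptions for illustration, and production players such as hls.js and dash.js use more elaborate logic that may also take buffer level into account.

```typescript
interface Rendition {
  name: string;
  bitrateBps: number;
}

// Exponentially weighted moving average of measured download throughput.
class ThroughputEstimator {
  private readonly alpha: number;
  private estimate: number | null = null;

  constructor(alpha = 0.3) {
    this.alpha = alpha; // weight given to the newest sample
  }

  // Record one segment download: `bytes` fetched in `durationMs` milliseconds.
  addSample(bytes: number, durationMs: number): void {
    const bps = (bytes * 8 * 1000) / durationMs;
    this.estimate =
      this.estimate === null ? bps : this.alpha * bps + (1 - this.alpha) * this.estimate;
  }

  get bps(): number | null {
    return this.estimate;
  }
}

// Highest rendition whose bitrate fits within `safety` × throughput;
// falls back to the lowest rendition if none fits.
function chooseRendition(renditions: Rendition[], throughputBps: number, safety = 0.8): Rendition {
  if (renditions.length === 0) throw new Error("no renditions");
  const sorted = [...renditions].sort((a, b) => a.bitrateBps - b.bitrateBps);
  let choice = sorted[0];
  for (const r of sorted) {
    if (r.bitrateBps <= throughputBps * safety) choice = r;
  }
  return choice;
}
```

The safety margin trades a slightly lower quality for fewer stalls: a throughput estimate that is a little optimistic no longer pushes the player into a rendition it cannot sustain.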

Measure latency, use benchmarks, and tweak performance for smooth streaming. Stay alert to network changes and adapt for a responsive viewer experience.

Conclusion

We explored various streaming protocols such as HTTP Live Streaming (HLS), Dynamic Adaptive Streaming over HTTP (DASH), and Real-Time Messaging Protocol (RTMP). Each protocol has its strengths and considerations in terms of latency, compatibility, and functionality.

Additionally, we delved into Jsmpeg, an innovative JavaScript-based solution for live streaming. Jsmpeg uses JavaScript, WebGL, and the HTML5 canvas element to decode and render MPEG-1 video directly in the browser, while the stream itself is encoded on the server side (for example with ffmpeg) and delivered over WebSockets. This low-latency pipeline makes it an attractive option for applications requiring real-time interaction.

We discussed benchmarking and performance optimization strategies, including measuring latency using network monitoring tools and client-side timing. We also explored various performance considerations and optimization techniques such as video encoding optimization, adaptive bitrate streaming, caching and prefetching, and server-side optimization.

By implementing these strategies and understanding the specific requirements of your streaming application, you can create a smooth and responsive streaming experience for your viewers, minimizing latency and enhancing the overall performance.